Ever get the feeling that someone, somewhere is watching your every move? The rise of modern surveillance technologies is often overlooked, yet many people believe it poses a threat to our personal liberties. Designers opposed to these invasive technologies are developing devices and accessories to keep society from turning into a dystopian, militarized environment.

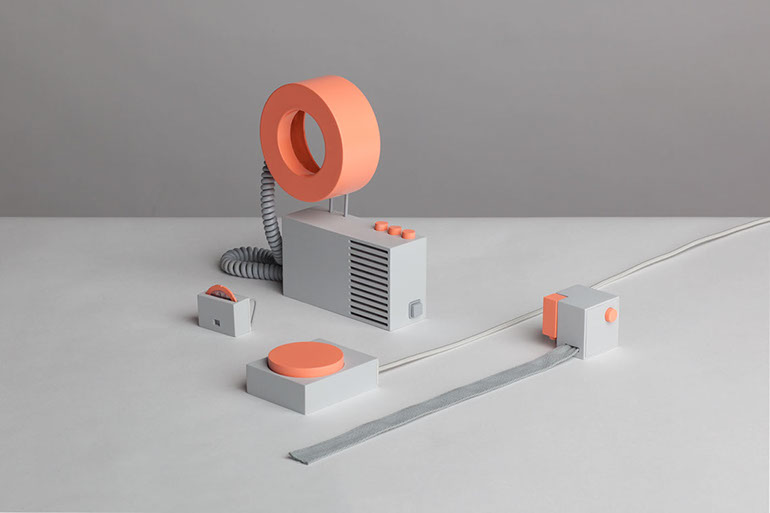

Two Master Design students Katja Trinkwalder (Netherlands) and Pia-Marie Stute (Germany) have designed a series of add-on accessories for those who are concerned about surveillance and their data security. Ironically called Accessoires For The Paranoid, the project explores an alternative approach to data security through four different “parasitic” objects producing fake data and hiding users’ true data identities behind “a veil of fictive information”.

Accessoires For The Paranoid by Katja Trinkwalder and Pia-Marie Stute

The designers point out that we have long become habituated to trade-offs in which “free” services are offered in exchange for some bits of our personal data. However, with the comfort of automation comes a danger of our connected devices collecting, storing and processing our personal data every day.

The four accessories include a webcam that projects fabricated scenes, a device misleading Alexa, and buttons that generate fake online data and user patterns.


Object A will appeal to anyone who has ever put a sticker over their laptop's webcam. It borrows the principle of a toy camera, placing a series of different scenes in front of the webcam's lens.


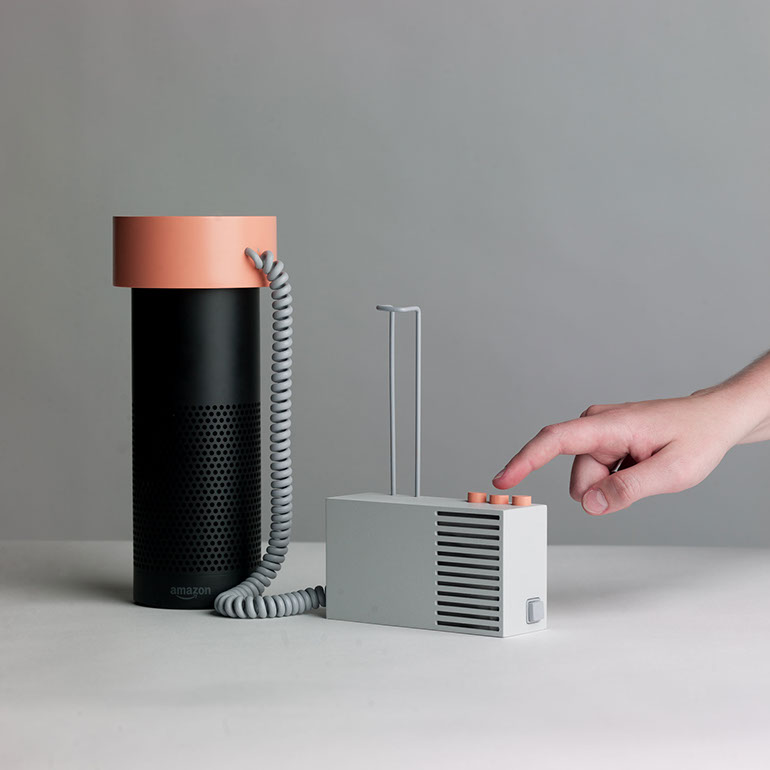

Object B prevents Amazon’s voice assistant, which constantly “listens” to what is going on in the user’s home whenever it is switched on, from processing and collecting data about her user and their surroundings. The device confuses Alexa’s algorithm with fake information, such as pre-recorded requests that occupy her with tasks far from the user’s actual interests or dialogues from random movie scenes, or it simply numbs her with white noise.


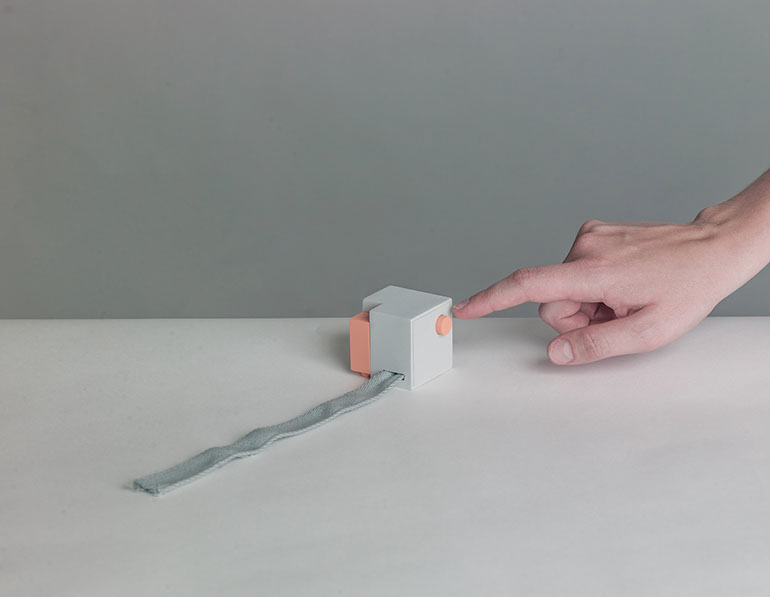

Connected to any computer, Object C will generate fake online data. At the push of a button, an algorithm will randomly create site-specific content on the websites of services such as Google, Facebook, YouTube, Twitter or Amazon. An Amazon wish list will be filled with unexpected interests, for instance, or the user’s Facebook account will hand out indiscriminate likes.


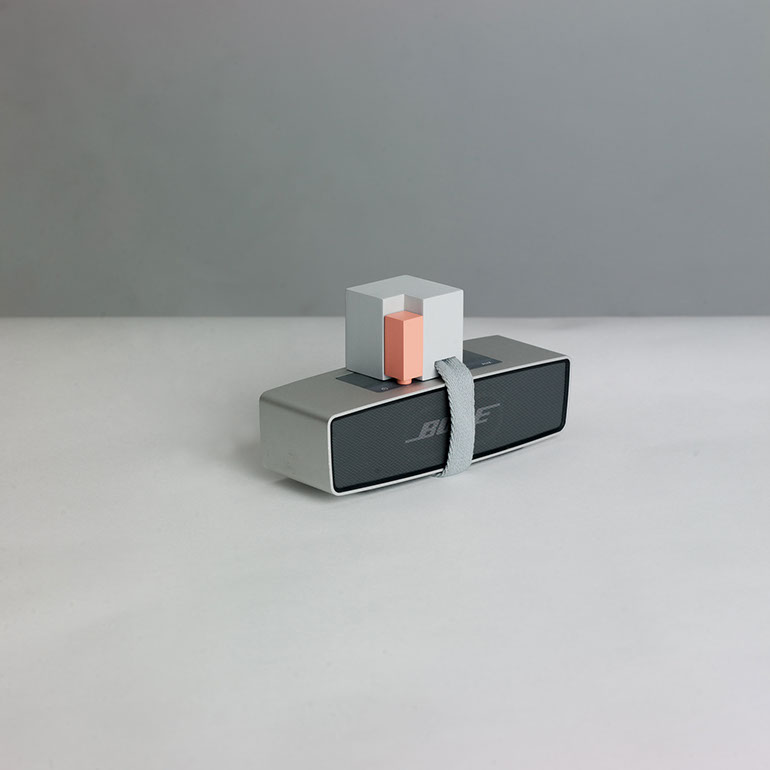

If activated, Object D will push the buttons of any connected device that is able to collect data, thus creating blurred user patterns during nighttime or when the user is not home.

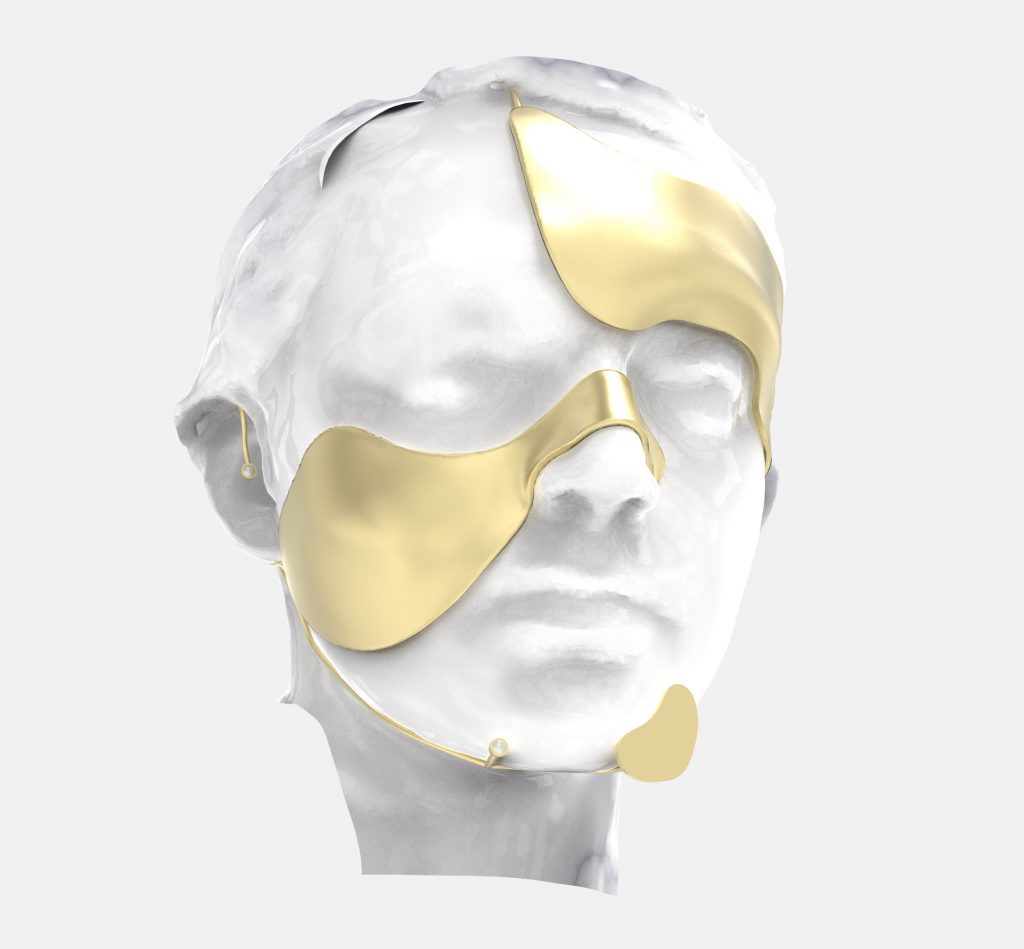

Incognito by Ewa Nowak

Polish designer Ewa Nowak, who runs the design studio Noma together with fellow designer Jaroslaw Markowicz, felt violated by the proliferation of face recognition technology. With cameras able to recognise our age, mood or sex and precisely match us against a database, the possibility of disappearing in the crowd ceases to exist. In response, Nowak has developed an accessory designed to fool the AI used in security systems, public-space cameras and online social media without concealing the user’s features.


The anti-AI Incognito face mask is a contemporary piece of jewellery made of brass that subtly covers the key elements of the face, preventing facial recognition algorithms from properly ‘reading’ one’s features. The mask slightly resembles ancient tribal masks. According to the designer, the form almost entirely resulted from the function of this object. Composed of geometric shapes deflecting face recognition software, Incognito is worn like glasses, with arms reaching around the wearer’s ears.


To ensure the success of Incognito, Nowak tested the mask using Facebook’s DeepFace algorithm, which accurately identifies the people pictured and asks if you’d like to tag them.

Orwell collection by Sara Sallam (also header image; via trendhunter)

Nowak is not the only designer to create objects as a response to the growing use of surveillance. Brooklyn-based designer Sara Sallam noticed that with tracking technology advancing so rapidly, Nowak’s mask was not effective against certain types of recognition. To fix that, Sallam has created a provocative collection of jewellery and accessories to protect the wearer from tech-enabled surveillance.

Named Orwell in homage to the author of the famous dystopian novel 1984, Sallam’s series features three chic pieces that can be worn on the face, chest and foot in order to block facial recognition, heartbeat detection and gait tracking algorithms.


The face piece obscures key facial geometry (the area where the nose and eyebrows meet, and the chin) and reduces facial symmetry, while its reflective material distorts patterns of light and dark across the face. When tested against Amazon Rekognition, the facial recognition software most commonly used by American police forces, the jewellery lowered the software’s “confidence rate” in identifying someone to between zero and 92 per cent. Anything under 99 per cent can’t be used in court as US law stands.


The armour-like chest piece protects against heartbeat detection, a very recent technology owned by the Department of Defence that uses lasers to detect heart rhythm on the skin’s surface.

The final shoe accessory, inspired by the old trick of placing a pebble in your shoe, works by interrupting gait recognition technology, which, according to the designer, has an 80 per cent accuracy rate in identifying an individual.


The objects are not only functional but also stylish. They have a captivating aesthetic and allow the wearer to remain identifiable to the people around them. Unlike some anti-tracking solutions that change the user’s appearance to the point of grotesqueness, Orwell is visually appealing. Each piece has a ripple-like finish reminiscent of pearl, a reference to Lover’s Eye jewellery: miniature paintings popular in the 1700s that featured an eye, often surrounded by pearls.
